LexiLearn Hub

Your knowledge base for mastering AI prompting, exploring new techniques, and staying ahead in the world of artificial intelligence.

Understanding how to debug and refine your prompts effectively is an essential skill for anyone working with Large Language Models (LLMs).

Modern Large Language Models (LLMs) have very large context windows; GPT-4.1, for example, can process up to 1 million tokens. This capacity is especially useful for tasks that involve large amounts of information.

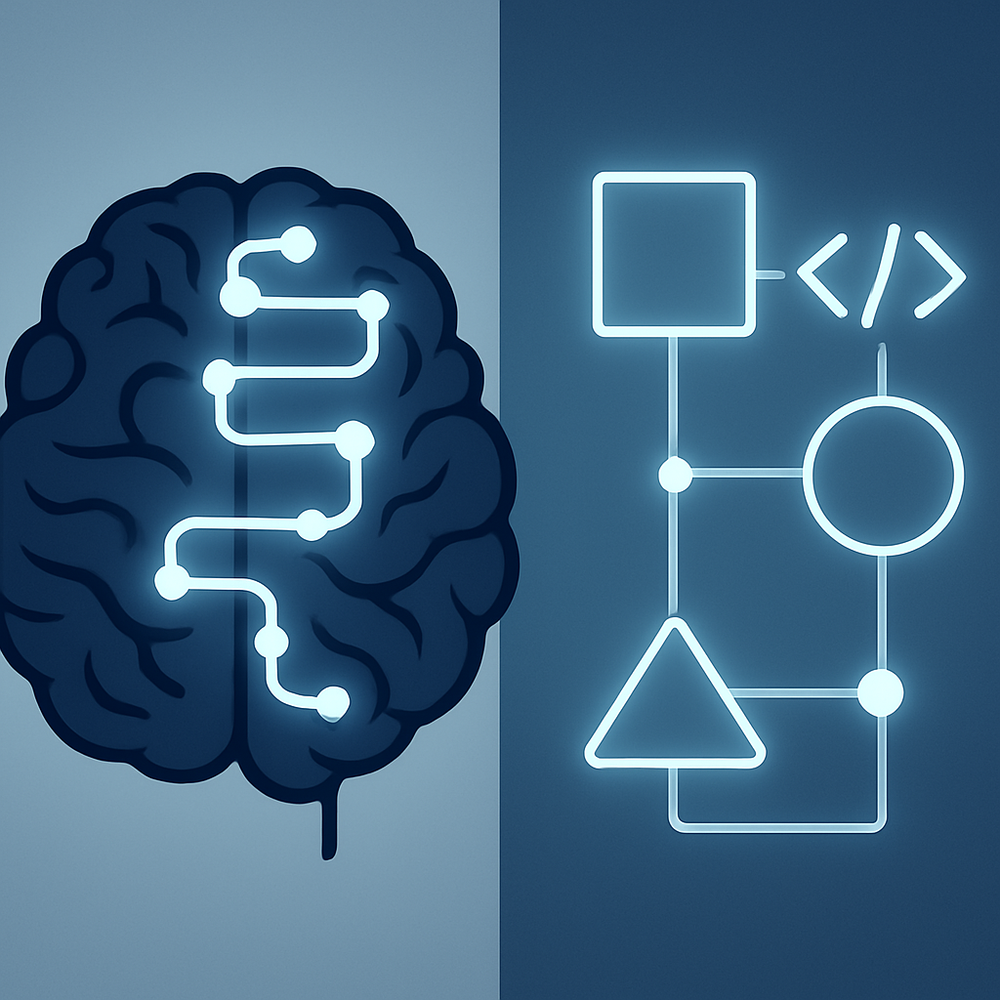

This article will explore two powerful intermediate techniques: Chain-of-Thought (CoT) Prompting and Structured Formatting with Delimiters.

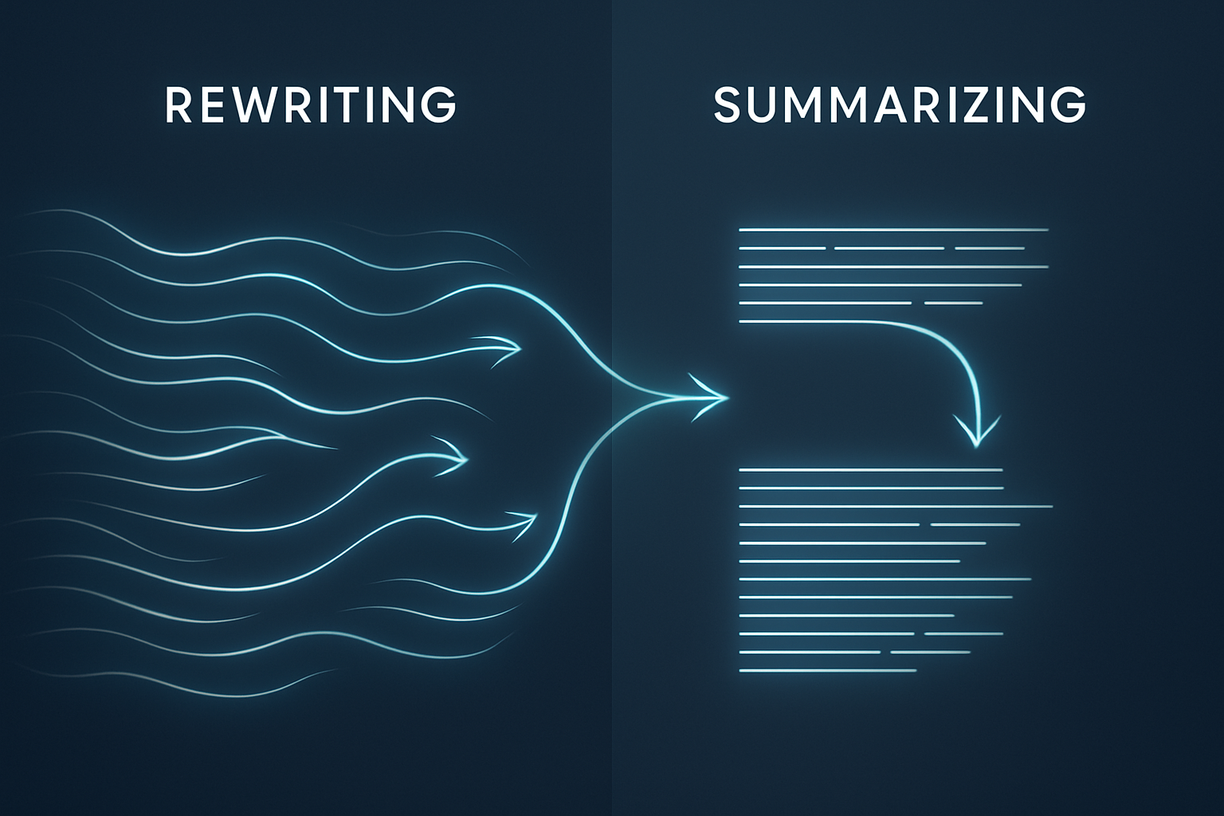

…performing specific tasks on existing text. This article will delve into two fundamental task-specific prompting techniques: rewriting and summarizing.

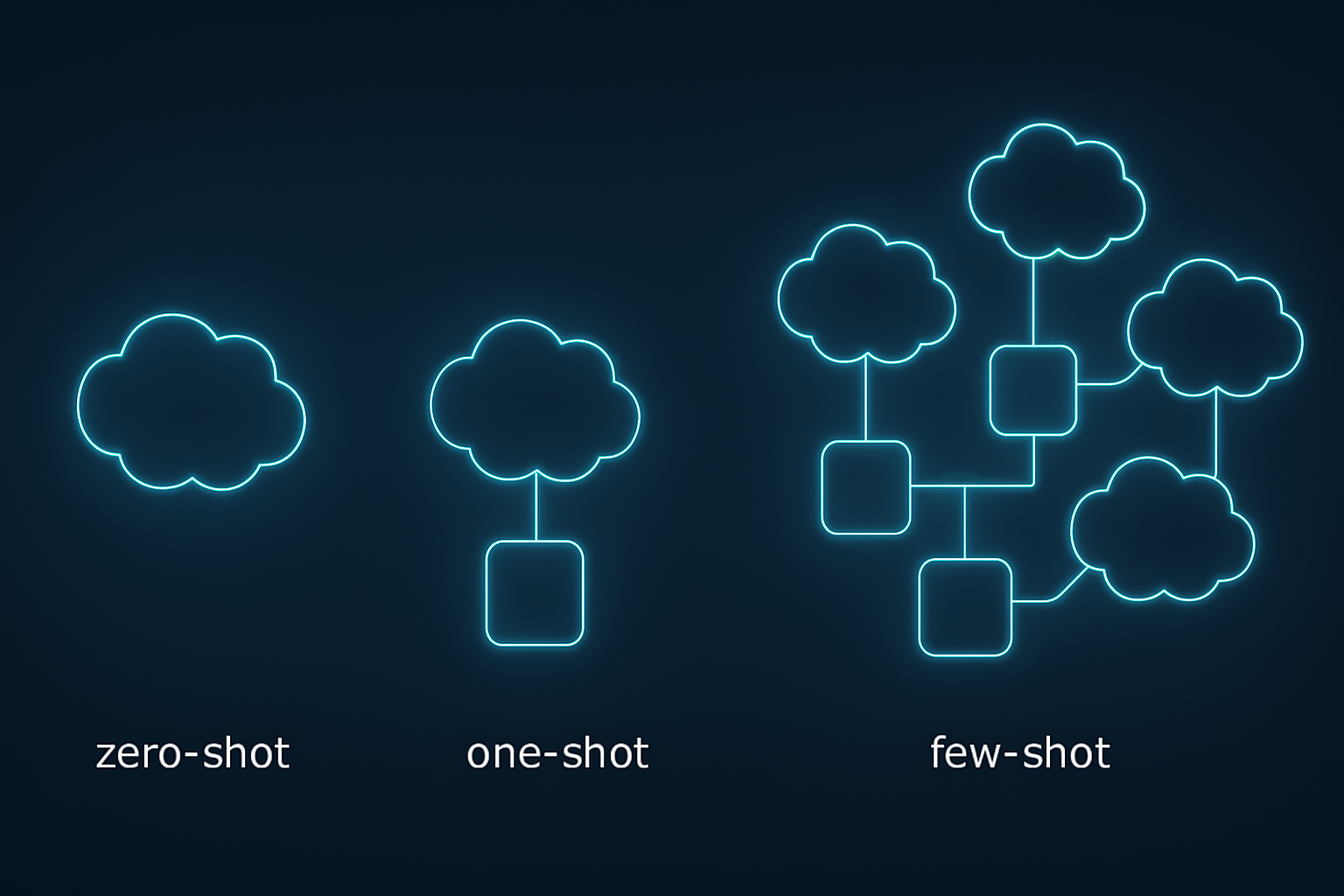

Large Language Models (LLMs) like ChatGPT can generalize impressively from their vast pre-training data, often producing coherent and relevant responses even without explicit examples. This inherent capability is what makes them so powerful and versatile.

In the dynamic landscape of Artificial Intelligence, and particularly when interacting with Large Language Models (LLMs), the quality of the output depends heavily on the quality of the input.